When ‘optimizations’ backfire: a wordle bot competition and a surprising probability lesson

Discover surprising probability insights from a Wordle bot competition, where a risky optimization strategy led to unexpected lessons.

In a recent wordle bot competition, participants were tasked with writing a program to guess the correct word as quickly as possible. While attempting to improve my bot’s performance, I stumbled upon an interesting probability problem involving collisions. In this post, I’ll share the insights I gained from the Wordle competition and the fascinating probability lesson I learned along the way.

The wordle bot competition and the probability problem

A risky optimization strategy that needed evaluation

The wordle competition had a simple premise: create a bot that could accurately guess a word from a list of 12,966 allowed words, of which 2,309 were candidate solutions. Each participant’s bot had to play 50 consecutive games, with the goal of minimizing the number of guesses needed to win each game.

As I worked on improving my bot’s performance, I encountered a dilemma. I wondered if I could improve my bot’s chances of success by removing a word from the candidate list once it had been identified as a solution in a previous game. However, this “optimization” posed a risk. If the same word appeared as a solution in multiple games, my bot would be unable to find it after removing it from the candidate list, ultimately causing a loss.

To assess the viability of this strategy, I needed to determine the probability of encountering duplicate solutions in the first 50 games. A simple calculation might suggest a 2% probability (50/2309), but as I soon discovered, this estimation was far from accurate.

Calculating the true probability of collisions

Diving into the mathematics of selecting unique items

Given that there’s a uniformly distributed set of n items, and k random selections from that set, what’s the probability of any 2 of the k items being the same?

How do we do that exactly?

I can share what I did!

To determine the true probability of encountering duplicate solutions, I began by calculating the probability of selecting unique solutions and then subtracting this value from one. I knew that the probability of selecting one unique item from a set of n items was independent of the total number of selections (k).

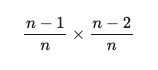

The probability of selecting two unique items from a set of n items can be represented as

Similarly, the probability of selecting three unique items from a set of n items is:

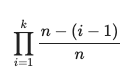

By using mathematical induction, I derived a formula for the probability of selecting k unique items from a set of n items

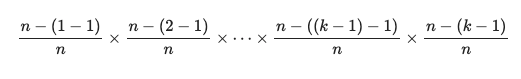

Expanding this formula, I arrived at:

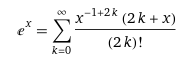

Using Taylor’s expansion:

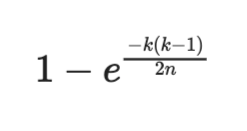

and the Epsilon-Delta proof, I have an approximate probability of selecting k unique items from a set of n items:

This can further be approximated to k²/2n. However, this approximation only works when k(k-1)/2n is very small (e.g., 0.1 or less). Since k = 50 and n = 2309 in our problem, k(k-1)/2n was actually slightly over 1/2, making this approximation inapplicable.

The surprising result

Sometimes optimizations we think of end up not being optimizations at all

After calculating the actual probability of encountering duplicate solutions in the first 50 games, I found that there was a substantial 40% chance of this occurring. This surprising result made it clear that my proposed “optimization” strategy carried a significant risk of causing my bot to lose games.

Given this information, I decided against implementing the optimization. Instead, I continued working on other ways to improve my bot’s performance while minimizing the risk of failure.

Embracing the unexpected lessons in probability

The value of diving deep into numbers and probabilities

This wordle bot competition taught me an important lesson about probabilities and when “optimizations” can backfire. By diving deep into the problem and calculating the true probability of collisions, I was able to make a more informed decision about the best strategy for my bot. This experience not only improved my understanding of probability but also highlighted the importance of thoroughly analyzing a situation before implementing a solution.

This exploration of probability in the context of the wordle bot competition serves as a reminder that seemingly simple problems can reveal complex and fascinating mathematical concepts. By thoroughly examining the potential risks and rewards of various strategies, we can make better-informed decisions and improve our overall understanding of the world around us. So, whether you’re tackling a game of wordle or facing a real-world challenge, don’t shy away from delving into the numbers and probabilities that underlie your situation — you might just learn something unexpected and valuable along the way.